Hi, welcome to my online course “Machine Learning for Dummies”. Courses like this are usually written by an expert. That is not the case here: I do not know anything about machine learning. I have spent more than 10 years working with server-side Java, moving data here and there. I have even worked as a REST API architect and as a Scrum master. Yes, the Scrum that eats programmers’ souls. But no machine learning whatsoever, apart from an image recognition course at university 12 years ago and the Machine Learning course on Coursera a few months ago. Now I have decided to learn ML, so I quit my job, declined an interesting offer and started learning.

Since deep learning is the current hype, I started with the Deep Learning course at Udacity. A deep learning course from Google right in my living room? Cool.

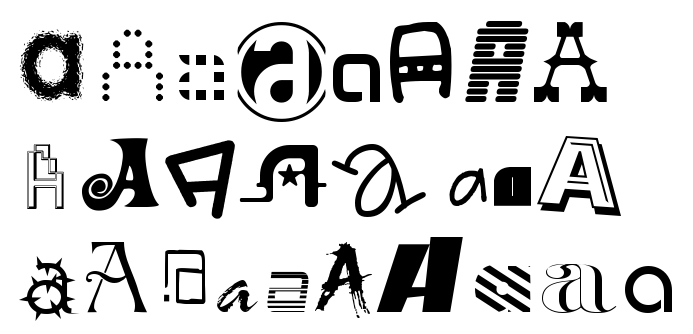

And the course went quite well until the very first exercise, which was about recognizing letters from the notMNIST dataset.

You have these “letters” and you want to recognize them. Sounds like a job for machine learning, right?

Installing Python libraries

To finish the exercise, you need to know something about machine learning and Python first. I knew neither. Python is quite simple; if you know programming, you will learn it in no time. The most complex task is the installation. I spent about six hours trying to install all the required libraries. If you do not know Python, you have no idea whether to use OS-specific packages (apt, brew), pip, easy_install or whatnot. The thing is, in Java you have projects that describe their dependencies using Maven or Gradle. So if you want to work on a Java project, you just execute ‘mvn test’, it downloads half of the Internet and you are done. In Python, as far as I could tell, you have no such project, so you have to download all the libraries yourself. What’s more, those libraries depend on each other and you need to have the correct versions. Well, I downloaded and recompiled half of the Internet multiple times, every time getting a different conflict (most often between matplotlib and numpy). Luckily, people using Python know that their package systems suck, so they provide Docker images with all the libraries installed. I used such an image to figure out which versions of the libraries work together. Today I tried to install everything again on Mac OS and was able to do it in under an hour using Anaconda, so maybe this Python distribution works better than the others.
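When hunting for version conflicts, it helps to see what is actually installed. Here is a minimal sketch of such a check; the helper name `library_versions` is made up for this post, and it assumes each package exposes a `__version__` attribute, which numpy, matplotlib and scikit-learn do but not every package does.

```python
import importlib

def library_versions(names):
    """Return {module name: version string} for each requested module."""
    versions = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = "not installed"
            continue
        # Assumption: the package exposes __version__; fall back if it does not.
        versions[name] = getattr(module, "__version__", "unknown")
    return versions

# scikit-learn is imported under the name "sklearn"
for name, version in library_versions(["numpy", "matplotlib", "sklearn"]).items():
    print(name + ": " + str(version))
```

Running the same snippet inside a working Docker image and on your own machine gives you two lists to compare.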

But once you have Python with all the libraries installed, you get your superpowers. Thanks to numpy, you can easily work with matrices, thanks to matplotlib you can plot charts and thanks to scikit-learn you can do machine learning without knowing much about it. That’s what I need.

Logistic Regression

So back to our notMNIST example. After reviewing most of the Coursera course, I learned that in machine learning you need features: the data you will use in your algorithm. But we have images, not features. The most naive approach is to take the pixels and use them as features. Images in notMNIST are 28×28 pixels, so for each image we have 784 numbers (shades of gray). We can create a 784-dimensional space and represent each image as one point in this space. Please think about it for a while. I will create a really high-dimensional space and represent each sample (image) by one point in that space. The idea is that if I have the right set of features, the points in this space will become separable. In other words, I will be able to split the space into two parts, one that contains the points representing the letter A and another with all the other letters. Logistic Regression is one algorithm that works this way, and there are implementations that do exactly that. Basically you just need to feed the algorithm samples with correct labels and it tries to figure out how to separate those points in the high-dimensional space. Once the algorithm finds the (hyper)plane that separates ‘A’s from other letters, you have your classifier. For each new letter you just check in which part of the space the corresponding point is and you know if it’s an A or not. Piece of cake.
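To make the "which side of the hyperplane" idea concrete, here is a small sketch with numpy. Everything in it is made up for illustration: the two fake images, the hand-picked `weights` and `bias`, and the helper names. A trained model would learn the weights from data instead.

```python
import numpy as np

def flatten_images(images):
    """Turn an (n, 28, 28) stack of images into an (n, 784) feature matrix."""
    return images.reshape(images.shape[0], -1)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_is_a(features, weights, bias):
    """Return the probability of 'A' and the yes/no decision for each row.

    weights and bias define the hyperplane weights . x + bias = 0;
    a probability above 0.5 means the point lies on the positive side.
    """
    scores = features @ weights + bias
    probabilities = sigmoid(scores)
    return probabilities, probabilities > 0.5

# Made-up stand-ins: two fake "images" and a hand-picked hyperplane.
images = np.zeros((2, 28, 28))
images[0, 0, 0] = 1.0    # first image lights up the top-left pixel
images[1, 27, 27] = 1.0  # second image lights up the bottom-right pixel

weights = np.zeros(784)
weights[0] = 2.0     # top-left pixel votes for 'A'
weights[783] = -2.0  # bottom-right pixel votes against 'A'
bias = 0.0

features = flatten_images(images)
probabilities, is_a = predict_is_a(features, weights, bias)
print(features.shape)  # (2, 784)
print(is_a)            # [ True False]
```

The first image lands on the 'A' side of the plane and the second on the other side, purely because of the sign of `weights . x + bias`.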

But if you think about it, it just cannot work. You just feed it raw pixels. Look at the images above: you are trying to recognize the letter ‘A’ based only on the values of the pixels. But each of the ‘A’s above is completely different. The learning algorithm just cannot learn to recognize the letter based on individual pixels. Or can it?

What’s more, I am trying to find a flat plane that would separate all the ‘A’s in my 784-dimensional space from all the other letters. What if the ‘A’s in my crazy space form a little island that is surrounded by other letters? Then I just cannot separate them by a flat plane. Or can I?
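The island worry is easy to play with on a toy example. The sketch below is not notMNIST, just nine made-up points on a line, and the extra feature `x ** 2` is hand-picked for the demonstration. It shows that an island no single cut can isolate in one dimension becomes separable by a flat cut once a suitable second feature is added.

```python
import numpy as np

# Toy 1-D data: the "island" sits in the middle, other points on both sides.
x = np.array([-3.0, -2.5, -2.0, -0.5, 0.0, 0.5, 2.0, 2.5, 3.0])
is_island = np.abs(x) < 1.0

def best_cut_accuracy(feature, labels):
    """Best accuracy of a single threshold on one feature, in either orientation."""
    best = 0.0
    for cut in feature:
        guess = feature <= cut
        # Try both "island is below the cut" and "island is above the cut".
        accuracy = max(np.mean(guess == labels), np.mean(guess != labels))
        best = max(best, accuracy)
    return float(best)

print(best_cut_accuracy(x, is_island))       # below 1.0: no single cut isolates the island
print(best_cut_accuracy(x ** 2, is_island))  # 1.0: a cut on x squared does
```

Whether 784 raw pixels give notMNIST enough room for this kind of luck is something only an experiment can tell.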

Well, surprisingly, I can, and it is quite easy to implement:

from sklearn import linear_model

# train_dataset, train_labels, valid_dataset and valid_labels are assumed
# to be already loaded, as in the exercise notebook
clf_l = linear_model.LogisticRegression()

# we do not want to use the whole set, my old CPU would just melt
size = 20000

# images are 28x28, we have to make a 784-element vector from each of them
tr = train_dataset[:size, :, :].reshape(size, 784)

# This is the most important line. I just feed the model 20k examples and it learns
clf_l.fit(tr, train_labels[:size])

# This is the prediction on the training set
prd_tr = clf_l.predict(tr)
print(float(sum(prd_tr == train_labels[:size])) / prd_tr.shape[0])
# prints 0.86, we are able to recognize 86% of the letters in the training set

# let's check that the model is not cheating, it has never seen
# the letters from the validation set before
prd_l = clf_l.predict(valid_dataset.reshape(valid_dataset.shape[0], 784))
print(float(sum(prd_l == valid_labels)) / prd_l.shape[0])
# Cool, 80% matches

Even this method that just can’t work is able to classify 80% of letters it has never seen before.
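The listing above relies on arrays from the course notebook, so it does not run on its own. As a rough, self-contained sketch of the same recipe, the following swaps in the small 8×8 digits dataset that ships with scikit-learn. That dataset is a stand-in, not notMNIST, so the accuracies it prints say nothing about the 86% and 80% above. The split ratio, `random_state` and `max_iter` values are arbitrary choices for the demo.

```python
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Stand-in data (an assumption, not notMNIST): 8x8 digit images bundled with scikit-learn.
digits = load_digits()
images, labels = digits.images, digits.target   # images has shape (n, 8, 8)

# Same trick as before: every pixel becomes one feature.
features = images.reshape(images.shape[0], -1)  # shape (n, 64)

# Hold back a quarter of the samples that the model never sees during training.
train_x, valid_x, train_y, valid_y = train_test_split(
    features, labels, test_size=0.25, random_state=0
)

# max_iter is raised so the solver has room to converge on raw pixel values.
clf = LogisticRegression(max_iter=5000)
clf.fit(train_x, train_y)

# score() returns the fraction of correctly classified samples.
train_accuracy = clf.score(train_x, train_y)
valid_accuracy = clf.score(valid_x, valid_y)
print("training accuracy:  ", train_accuracy)
print("validation accuracy:", valid_accuracy)
```

Comparing the two numbers is the same sanity check as above: a big gap between them would mean the model memorized the training set.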

You can even easily visualize the letters that did not match:

import numpy
import matplotlib.pyplot as plt
import matplotlib.cm as cm

# in a Jupyter notebook, show plots inline
%matplotlib inline

def num2char(num):
    return chr(num + ord('a'))

# indexes of letters that do not match
# change the last number to get another letter
idx = numpy.where(prd_l != valid_labels)[0][17]

# predict returns an array, so take its first (and only) element
print("Model predicts: " + num2char(clf_l.predict(valid_dataset[idx].reshape(1, 784))[0]))
print("Should be: " + num2char(valid_labels[idx]))

plt.imshow(valid_dataset[idx, :, :] + 128, cmap=cm.Greys_r)

This should have been a G, but the model classified it as a C. An understandable mistake. Another letter is even better.

This obvious ‘F’ has been incorrectly classified as an A. Well, to me it looks more like an A than an F. The thing is that even this simple, naive model makes mostly “understandable” mistakes. Either the misclassified letter is not a letter at all, or the classifier makes a mistake because the letters are really similar, like I and J, or I and F.
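Instead of clicking through mistakes one by one, you can count which letters get confused with which. Below is a minimal sketch of such a confusion count using numpy only. The label arrays are made up for illustration, the helper names are hypothetical, and it assumes labels are integers with 0 = A through 9 = J.

```python
import numpy as np

def confusion_counts(true_labels, predicted_labels, num_classes):
    """counts[t, p] = how many samples of true class t were predicted as class p."""
    counts = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(counts, (true_labels, predicted_labels), 1)
    return counts

def most_confused_pairs(counts, top=3):
    """Return (true, predicted, count) for the largest off-diagonal entries."""
    off_diagonal = counts.copy()
    np.fill_diagonal(off_diagonal, 0)  # correct predictions are not mistakes
    order = np.argsort(off_diagonal, axis=None)[::-1][:top]
    rows, cols = np.unravel_index(order, off_diagonal.shape)
    return [(int(r), int(c), int(off_diagonal[r, c])) for r, c in zip(rows, cols)]

# Made-up labels for illustration, assuming 0 = A ... 9 = J.
true_labels = np.array([8, 8, 8, 9, 9, 5, 5, 0])
predicted = np.array([9, 9, 8, 9, 8, 8, 5, 0])

counts = confusion_counts(true_labels, predicted, num_classes=10)
pairs = most_confused_pairs(counts)
for t, p, n in pairs:
    print(chr(t + ord('A')), "mistaken for", chr(p + ord('A')), n, "times")
```

With the real `valid_labels` and `prd_l` in place of the made-up arrays, the top pairs should show whether the I/J and I/F confusions really dominate.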

So what have we learned today? First of all, that even a stupid model on stupid features can work surprisingly well. Secondly, that Python libraries are not easy to install but are easy to use. Here I have to admit that similar code in Java would have been just crazy.

Next time we will try SVM and maybe try to figure out some better features to make our model even better.
